Clusters 1 7 2

Introduction

What is a cluster?

A cluster (as it pertains to computers) refers to a group of servers and other resources that are connected through hardware, networks and software to behave as if they were a single system. There are many reasons this is an attractive thing to do, even for smaller enterprises. These reasons include: high availability, load balancing, parallel processing, systems management and scalability. We'll talk more about each of these applications later in this course. But the main thing to understand right now about clustering is that its goal is to make a group of computers appear (to the outside world and to internal user departments) as if they were one, totally integrated system that is always available and always working fast!

A little history, please?

For those who are interested, here is a tidbit of history on the genesis and evolution of clustering.

Early on, clustering was almost exclusively synonymous with the need to provide scalable solutions for a growing enterprise. In fact, as recently as 1997, a bunch of RS/6000s were clustered together to provide the needed scalability for the famous 'Deep Blue' chess match that pitted an electronic brain against the incredible brilliance of Garry Kasparov's 'organic' brain. (Deep Blue won, by the way.)

Today's 'killer' clustering applications

Today, however, individual servers from almost any vendor are generally quite scalable in their own right, so scalability has become merely an 'oh, by the way' benefit of a cluster that is primarily installed to provide something else. So you may be asking, 'What are the driving reasons today for installing a clustering solution?' The answer (to repeat the message delivered earlier on this page) is simple: AVAILABILITY and FAST RESPONSE (load balancing) for all types of users!

Why are clusters so important?

High Availability: The need to have core systems and their concomitant Web front-end servers running literally all the time means that high availability is imperative. This is where clustering is a brilliant solution. Clustering provides transparent backup and failover, and delivers redundancy of systems, peripherals and data — all of which are critical to sustaining a 24x7x365 IS arena.

Server Integration: Additionally, a Web-based business environment also means that enterprises can no longer expect a single, gigantic 'computer in the sky' to solve all their processing needs, even though a server can probably be purchased to handle a company's total 'volumes' (in regard to data storage, etc.). Many applications require that server resources be tuned in different ways to achieve different purposes. Generally, there are three classes of workloads that require different server dynamics:

- Data transaction servers manage core business processes.

- Web application servers manage the end-user experience.

- Appliance servers manage specific network functions.

Yet all the applications that run within these different classes of workloads (and which, therefore, are probably implemented on separate servers) must access, share and update each other's data in real time.

It is also true that functions that have traditionally been directly connected to individual servers, such as network connections and storage, are becoming centrally deployed so as to deliver more efficient utilization, easier scalability and greater flexibility.

Clustering elegantly solves the problem of integrating these three types of servers along with centralized network and storage resources. And by the way, clustering also allows the IS folks to manage these separate servers as if they were one.

High Availability

Does Your Organization Need High Availability Clustering?

Availability can be measured relative to '100% operational' or 'never failing.' Have you ever heard someone refer to 'five 9s'? This metric expresses system availability by the number of '9s' in the uptime percentage. One 9 is 90% uptime, two 9s are 99%, three 9s are 99.9%, and so on. Four-9 uptime would mean that your system has less than one hour of downtime per year. Five 9s are currently considered the entry point for high availability solutions. 'Five 9s (which represents about 5.26 minutes of annual downtime) would be considered the Holy Grail of high-availability for distributed systems,' according to Ron Anderson, who writes about 'OSes & Network Services.' He says, 'Five-9 uptime is expensive and complex, but worth the cost and effort if your e-business lives or dies by the second, as Amazon.com does, for example.'
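The arithmetic behind the 'nines' is simple: with n nines, the system may be unavailable for a fraction 10^-n of the time. The short Python sketch below converts a number of nines into the allowed annual downtime. It assumes a 365-day year (525,600 minutes); it is only an illustration of the arithmetic, not a formula taken from the quoted source.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; assumes a 365-day year


def availability(nines: int) -> float:
    """Availability as a fraction, e.g. 3 nines -> 0.999."""
    return 1 - 10 ** -nines


def annual_downtime_minutes(nines: int) -> float:
    """Maximum downtime per year permitted by the given number of nines."""
    # Unavailability is 10**-nines, so allowed downtime scales directly with it.
    return MINUTES_PER_YEAR * 10 ** -nines


for n in range(1, 6):
    print(f"{n} nine(s): {availability(n):.5%} uptime, "
          f"{annual_downtime_minutes(n):,.2f} minutes of downtime per year")
```

Under these assumptions, four nines allow about 52.6 minutes of downtime per year (under an hour) and five nines about 5.26 minutes.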

Let's look at the chart on this page to better understand the costs that can be associated with not having a high-availability clustering system in place. As you can see, computer downtime can cost millions of dollars. Fault-tolerance costs continue to seem more palatable to senior management as an ever-increasing number of businesses begin to experience the costs associated with not having invested in high-availability measures.

Cluster technology helps bring availability and performance to the traditional data center model. Formalized service levels within corporations and between businesses are becoming standard practice. Availability, performance, and reliability are chief among the metrics measured. Continuous availability on a technical level tends to reduce business planning costs. The affected areas include application availability, batch versus backup contention, decision support systems, use of assets for something other than just disaster recovery, and point-in-time reconstruction. Addressing these issues leads to cost avoidance, and the costs avoided are very tangible ones that must be accounted for in the equation.

Redundancy. Resiliency. What's in a Name?

Since a computer system consists of many parts, all of which usually need to be present in order for the whole to be operational, much planning for high availability centers around backup and failover processing and data storage and access. For storage, a redundant array of independent disks (RAID) is one approach. A more recent approach is the storage area network (SAN). A SAN is a high-speed special-purpose network that interconnects various types of data storage devices with data servers on behalf of a larger network of users.

To achieve continuous availability, more than just robust system availability is needed. Critical data and critical applications must also be resilient to outages. Both must be accessible across the cluster even when the normal hosting system for the resource fails. A complete solution is achieved when the critical data and the critical applications are made to be resilient resources and are always available. Data resilience ensures that a copy of the data is always accessible to end users of the cluster. Application resilience ensures that the services provided by the application are always accessible to end users of the cluster. Application resilience is provided through IP address takeover and restarting the application on the first backup system.

Similarly, every other point of failure that can interrupt availability must be eliminated. So, redundancy is critical throughout the system, including networks, power and Internet connectivity.

If a system outage or a site loss occurs, the functions provided on a clustered database server system can be switched over to a designated backup system that contains a current copy (replica) of your critical application data or that becomes the primary point of access for the resilient device containing that critical data. The failover can be automatic when a system failure happens, or you can control how and when the transfer takes place by manually initiating a switchover.

All this redundancy allows not only for failovers, but also for switchovers. A switchover happens if you manually switch access from one system to another. This is highly convenient when you need to perform system maintenance, such as applying program temporary fixes (PTFs), installing a new release, or upgrading your system.
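The difference between an automatic failover and a manual switchover can be sketched as a tiny state model. The Python below is a hypothetical illustration, not an IBM interface: `ResourceGroup`, its ordered `backups` list and the `takeover_ip` field are invented names. It models the behavior described earlier in this section, where the application's IP address is taken over by the first available backup system and the application is restarted there.

```python
from dataclasses import dataclass, field


@dataclass
class ResourceGroup:
    """Hypothetical model of a resilient application and its takeover IP address."""
    name: str
    primary: str
    backups: list[str]   # ordered: the first entry is the preferred backup
    takeover_ip: str     # address clients use; conceptually it follows the active node
    active_node: str = field(init=False)

    def __post_init__(self) -> None:
        self.active_node = self.primary

    def _move_to(self, node: str) -> None:
        # A real cluster would reassign takeover_ip and restart the
        # application on `node`; this sketch only records the new location.
        self.active_node = node

    def failover(self, failed_node: str, up_nodes: set[str]) -> str:
        """Automatic: triggered when a node fails."""
        if failed_node != self.active_node:
            return self.active_node  # this group is unaffected
        for node in [self.primary, *self.backups]:
            if node != failed_node and node in up_nodes:
                self._move_to(node)
                return node
        raise RuntimeError(f"no surviving node can host {self.name}")

    def switchover(self, target: str) -> str:
        """Manual: an operator moves the group, e.g. before applying PTFs."""
        if target not in (self.primary, *self.backups):
            raise ValueError(f"{target} is not configured for {self.name}")
        self._move_to(target)
        return target


orders = ResourceGroup("orders", primary="NODE_A",
                       backups=["NODE_B", "NODE_C"], takeover_ip="10.0.0.50")
print(orders.failover("NODE_A", up_nodes={"NODE_B", "NODE_C"}))  # NODE_B
print(orders.switchover("NODE_A"))  # NODE_A, e.g. once maintenance is done
```

The only difference between the two paths is who initiates the move: failover is driven by a detected failure, while switchover is an operator's choice of target.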

Clustering Configurations for High Availability

The simplest high-availability cluster configuration is a two-node cluster (as shown here using two iSeries servers). There is one primary system for all cluster resources and a second system that is a backup, ready to take over during an outage of the primary system.

Figure 1. Two-node cluster

Another typical environment (also shown here) is the mutual takeover cluster. Each node in this environment serves as the primary node for some sets of resources and as the backup node for other sets of resources. With mutual takeover, every system or node is used for production work, and all critical production work is accessible from multiple systems, multiple nodes, or a cluster.

In both these scenarios, replication is key. Replication means that a copy of something is produced in real time — for instance, copying objects from one node in a cluster to one or more other nodes in the cluster. Replication makes and keeps the objects on your systems identical. If you make a change to an object on one node in a cluster, this change is replicated to other nodes in the cluster.
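Replication as described here can be illustrated with a minimal, in-memory sketch: every change made on one node is applied locally and then pushed to every other node, so all copies stay identical. The `Node` and `Cluster` classes are hypothetical. Real products replicate over the network and must handle lost messages, node failures and (often) asynchronous delivery, none of which this sketch attempts.

```python
class Node:
    """A cluster node holding its own copy of the replicated objects."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.objects: dict[str, str] = {}

    def apply(self, key: str, value: str) -> None:
        self.objects[key] = value


class Cluster:
    """Hypothetical cluster that replicates every change to all nodes synchronously."""

    def __init__(self, names: list[str]) -> None:
        self.nodes = {name: Node(name) for name in names}

    def update(self, origin: str, key: str, value: str) -> None:
        # Apply the change on the node where it was made...
        self.nodes[origin].apply(key, value)
        # ...then replicate it to every other node before returning.
        for name, node in self.nodes.items():
            if name != origin:
                node.apply(key, value)

    def in_sync(self) -> bool:
        copies = [node.objects for node in self.nodes.values()]
        return all(copy == copies[0] for copy in copies)


cluster = Cluster(["NODE_A", "NODE_B", "NODE_C"])
cluster.update("NODE_A", "order:1001", "shipped")
print(cluster.nodes["NODE_C"].objects)  # {'order:1001': 'shipped'}
print(cluster.in_sync())                # True
```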

[NOTE: If you are planning on using two-node clustering, an ultra-conservative design would include a minimum of three systems (as shown here). In this way, you can ensure that you will always have one system to switch over to, even if a backup is in process when the failure occurs on the primary system.]

Beyond high availability, clustering serves several other purposes, and many of them are complementary.

Load balancing: Load balancing involves the divvying up of work that a server has to do between two or more computers so the work gets done more quickly. Load balancing can be implemented with hardware, software, or a combination of both, and is a highly attractive reason to choose a clustering solution. When there is a need to balance workloads coming in from a Web site, one approach is to route each request to a different server host address in a domain name system (DNS) table, round-robin style.
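Round-robin distribution is easy to illustrate. The Python below is a hypothetical dispatcher (not a DNS implementation) that hands requests to a fixed list of server addresses in turn, which is the effect round-robin DNS aims for when it rotates the addresses it returns. The addresses are placeholders from the documentation range.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands each incoming request to the next server in a fixed rotation."""

    def __init__(self, servers: list[str]) -> None:
        if not servers:
            raise ValueError("at least one server is required")
        self._rotation = cycle(servers)

    def next_server(self) -> str:
        return next(self._rotation)


balancer = RoundRobinBalancer(["192.0.2.10", "192.0.2.11", "192.0.2.12"])
assignments = [balancer.next_server() for _ in range(5)]
print(assignments)
# ['192.0.2.10', '192.0.2.11', '192.0.2.12', '192.0.2.10', '192.0.2.11']
```

Plain round robin ignores how busy each server is and whether it is even running, which is one reason dedicated load-balancing hardware or software is often used instead of, or alongside, it.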

Scalability: In information technology, scalability involves the ability to retain performance levels when seamlessly adding new components as your business growth requires. It seems self-evident that the reason you would need to add processors and disk storage is because business volumes have grown. But in today's Web-driven business environment, business volumes coming from Web servers can become so enormous, so quickly, that scalability can often best be solved through sophisticated clustering and load balancing techniques. This is because scalability now has to support peak demand, not just normal demand.

Capacity on Demand (CoD): Usually referenced in tandem with the need to handle peak demand, CoD actually delivers a new form of horizontal scalability. In this environment, the clustering technology must be able to allocate and deallocate computing resources (e.g., servers, storage), as workload demands grow and change. It must also be able to quickly embrace a new, unplanned service.
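One simple way to picture capacity on demand is a rule that periodically recalculates how many servers the current workload needs and then allocates or releases servers to match. The sketch below assumes a fixed, hypothetical per-server capacity and illustrative minimum and maximum pool sizes; real CoD offerings use much richer policies.

```python
import math


def servers_needed(load: float, capacity_per_server: float,
                   min_servers: int = 1, max_servers: int = 16) -> int:
    """Servers to keep allocated for the current load, clamped to the pool limits."""
    if capacity_per_server <= 0:
        raise ValueError("capacity_per_server must be positive")
    wanted = math.ceil(load / capacity_per_server)
    return max(min_servers, min(max_servers, wanted))


def rebalance(current: int, load: float, capacity_per_server: float) -> int:
    """Positive result: servers to allocate; negative: servers to release."""
    return servers_needed(load, capacity_per_server) - current


# 1,000 requests/s arriving, each server handles 300 requests/s, 2 servers allocated now
print(rebalance(current=2, load=1000, capacity_per_server=300))  # 2 (grow to 4)
print(rebalance(current=4, load=250, capacity_per_server=300))   # -3 (shrink to 1)
```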

Systems management: Simplified administration of servers can be achieved through clustering — effectively allowing you to manage a group of systems as a single system or single database. Features such as intuitive management and administration, detection and recovery from failures, and the ability to add or delete resources without full service interruption are required for today's clustered systems management solutions. Also, even though in the past it was adequate for resource management to be command-driven, now it must be on-demand and fully automated.
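Automated failure detection is often built on heartbeats: each node periodically reports that it is alive, and a node that stays silent longer than a timeout is presumed failed, which can then trigger recovery. This Python sketch is a hypothetical illustration (the class name and the 10-second timeout are assumptions). It takes timestamps as arguments so it can be exercised without waiting; a live system would typically read them from `time.monotonic()`.

```python
class HeartbeatMonitor:
    """Flags nodes whose last heartbeat is older than `timeout` seconds."""

    def __init__(self, timeout: float = 10.0) -> None:
        self.timeout = timeout
        self.last_seen: dict[str, float] = {}

    def heartbeat(self, node: str, now: float) -> None:
        # Record the time at which `node` last reported in.
        self.last_seen[node] = now

    def failed_nodes(self, now: float) -> list[str]:
        return sorted(node for node, seen in self.last_seen.items()
                      if now - seen > self.timeout)


monitor = HeartbeatMonitor(timeout=10.0)
monitor.heartbeat("NODE_A", now=100.0)
monitor.heartbeat("NODE_B", now=105.0)
print(monitor.failed_nodes(now=112.0))  # ['NODE_A']: silent for 12 seconds
```

A missed heartbeat only shows that a node has stopped answering; the cause may be a network fault rather than a dead server, which is why careful network design matters as much as detection.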

High-performance parallel processing: For many applications, program instructions can be divided among multiple processors so that the total running time for the program is dramatically reduced. In symmetric multiprocessing systems (SMP) each processor is equally capable and responsible for managing the flow of work through the system. SMP machines do well on all types of problems, provided the amount of data involved is not too large. Massively parallel processing (MPP) systems can also provide parallel processing capability, and truly excel when used in environments that involve many separate, independent operations on large quantities of data, such as data mining and artificial intelligence tasks. Apropos of this discussion, however, clustering can deliver relatively low-cost parallel processing for scientific and other applications that lend themselves to parallel operations (e.g., database transactions, scientific simulation and data mining).

[NOTE: Perhaps the world's most famous parallel processing application today is the SETI@HOME project. Managed by the University of California at Berkeley, SETI@HOME is running (in the background) on close to 3.5 million personal computers in 226 countries worldwide. Each of these computers is processing digitized 'noise' from outer space in a feverish search to answer the question, 'Is anyone out there?' To date, the total processing time donated in aggregate by personal computer owners to this huge parallel processing effort is approaching 800,000 years!]

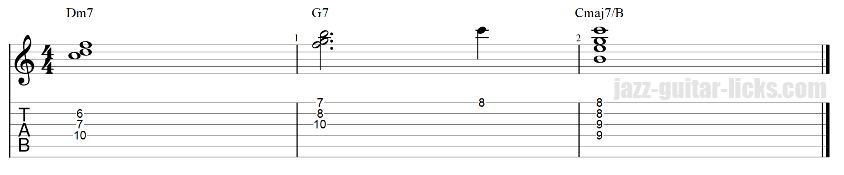

Load-Balancing Cluster Model

With this clustering model, the number of users (or the number of transactions) can be allocated (via a load-balancing algorithm) across a number of application instances (here, we're showing Web application server (WAS) application instances) so as to increase transaction throughput. See Figure 2 below.

Figure 2. Cluster computing models

This model easily scales up as the overall volume of users and transactions goes up. The scale-up happens through simple application replication only, requiring little or no application modification or alteration.

High Availability Cluster Model

This cluster model, as shown in Figure 3, expands on the simple load-balancing model shown in the previous chart. Not only does it provide load balancing, it also delivers high availability through redundancy of applications and data. This, of course, requires at least two nodes: a primary and a backup.

Figure 3. Cluster computing models

In this model, the nodes can be active/passive or active/active. In the active/passive scenario, one server is doing most of the work while the second server is spending most of its time on replication work. In the active/active scenario, both servers are doing primary work and both are accomplishing replication tasks so that each server always 'looks' just like the other.

In both instances, instant failover is achievable should the primary node (or the primary node for a particular application) experience a system or application outage.

As with the previous model, this model easily scales up as the overall volume of users and transactions goes up, through simple application replication that requires little or no application modification or alteration.

High-performance Parallel Application Cluster -- Technical Model

Figure 4 illustrates that extreme vertical scalability is achievable for a single large computing task. The logic shown here is essentially based on the message passing interface (MPI) standard. This model is best applied to scientific and technical tasks, such as artificial intelligence computations.

Figure 4. High performance technical computing

In this high performance model, the application is actually 'decomposed' so that segments of its tasks can safely be run in parallel.
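The decomposition idea can be shown without an MPI library. In an MPI program each process knows its rank and the total number of processes, works only on its own slice of the data, and the partial results are then combined in a reduction. The Python below simulates that pattern sequentially in a single process, with a hypothetical `block_range` helper computing each rank's slice; a real cluster job would run each rank as a separate process, typically on a separate node, and combine results with an MPI call such as `MPI_Reduce`.

```python
def block_range(rank: int, size: int, n: int) -> range:
    """Indices owned by `rank` when n items are split as evenly as possible over `size` ranks."""
    start = rank * n // size
    stop = (rank + 1) * n // size
    return range(start, stop)


def rank_work(rank: int, size: int, data: list[float]) -> float:
    # Each rank computes a partial result using only its own block of the data.
    block = block_range(rank, size, len(data))
    return sum(x * x for x in data[block.start:block.stop])


def parallel_sum_of_squares(data: list[float], size: int) -> float:
    # Simulated one rank at a time here; under MPI every rank would run
    # concurrently and the partial sums would be combined by a reduction.
    partials = [rank_work(rank, size, data) for rank in range(size)]
    return sum(partials)


data = [float(i) for i in range(1, 11)]
print([list(block_range(r, 4, len(data))) for r in range(4)])
# [[0, 1], [2, 3, 4], [5, 6], [7, 8, 9]]
print(parallel_sum_of_squares(data, size=4))  # 385.0
```

The blocks do not overlap and together cover every index, which is what makes it safe to run the pieces in parallel and simply add up the partial results.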

High-performance Parallel Application Cluster -- Commercial model

Figure 5 demonstrates the capacity to deliver extreme database scalability within the commercial application arena. In this environment, 'shared nothing' or 'shared disk' might be the requirement 'of the day,' and can be accommodated. You would implement this model in commercial parallel database situations, such as DB2 UDB EEE, Informix XPS or Oracle Parallel Server.

Figure 5. High performance commercial computing

As with the technical high-performance model shown on the previous chart, this high-performance commercial clustering model requires that the application be 'decomposed' so that segments of its tasks can safely be run in parallel.
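In a 'shared nothing' parallel database, each node owns a disjoint partition of the data, and a query is decomposed into one piece per partition whose partial results are then merged. The Python below is a generic sketch of hash partitioning under that assumption; the function names are hypothetical, and it does not describe how DB2 UDB EEE, Informix XPS or Oracle Parallel Server actually distribute data (Oracle Parallel Server, for instance, used a shared-disk design).

```python
import zlib
from collections import defaultdict

Row = tuple[str, float]  # (customer key, order amount)


def partition_for(key: str, partitions: int) -> int:
    """Deterministically map a row key to a partition (node) number."""
    # crc32 is stable across runs, unlike Python's salted built-in hash() for str.
    return zlib.crc32(key.encode("utf-8")) % partitions


def distribute(rows: list[Row], partitions: int) -> dict[int, list[Row]]:
    nodes: dict[int, list[Row]] = defaultdict(list)
    for key, amount in rows:
        nodes[partition_for(key, partitions)].append((key, amount))
    return nodes


def total_amount(nodes: dict[int, list[Row]]) -> float:
    # Each node sums only the rows it owns; a coordinator adds the partial sums.
    partial_sums = [sum(amount for _, amount in rows) for rows in nodes.values()]
    return sum(partial_sums)


orders = [("cust-01", 120.0), ("cust-02", 75.5), ("cust-03", 30.0), ("cust-01", 9.5)]
nodes = distribute(orders, partitions=3)
print(total_amount(nodes))  # 235.0
```

Because rows with the same key always land on the same node, queries that group or join by that key can run on each node without moving data between nodes.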

Workload Consolidation/Common Management Domain Cluster

Figure 6 shows a simple arrangement of heterogeneous server tasks -- but all are running on a single physical system (in different partitions, with different granularities of systems resource allocated to them). One of the major benefits offered by this model is that of convenient and simple systems management -- a single point of control.

Figure 6. Common Management Domain

Additionally, this consolidation model delivers a high quality of service (resources) in a cost-effective manner.

Select the best hardware for your purposes

Determine what data to replicate. Determining what data you need to replicate is similar to deciding what kind of data you need to back up and save when preparing a backup and recovery strategy. In essence, you must determine which data in your environment is critical to keeping your business up and running. For example, if you are running a business on the Web, your critical data may include today's orders, inventory data, and customer records. Typically, information that is more static in nature does not have to be replicated on a daily basis. Determining, in advance, what data to replicate is important because it will drive your storage decision.

Decide which storage to use for replication. Key considerations for determining which systems to use for replication are performance capacity, storage capacity, critical data, and disaster prevention. If your system fails over, you need to know what data and applications are running on the primary system and the backup system. Put the critical data on the system that is most capable of handling the workload in case it fails over. Obviously, you do not want to run out of storage space. Note, too, that if your primary system runs out of space and fails over, the backup system is highly likely to fail as well if its storage was sized the same way. And, in regard to disaster prevention, to ensure that a natural disaster such as a flood, tornado, or hurricane cannot destroy both your data center and its replica, you should locate the replicated system remotely, perhaps even in another state.
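The storage warning in the paragraph above can be turned into a simple pre-failover check: the backup must have room for the replicated data plus whatever is still expected to be written, with some headroom. The function below is a hypothetical sizing sketch; its name, the 20% headroom and the growth figures are illustrative assumptions, not IBM guidance.

```python
def backup_can_absorb(replicated_gb: float, expected_growth_gb: float,
                      backup_capacity_gb: float, headroom: float = 0.20) -> bool:
    """True if the backup can hold the replicated data, its expected growth and a safety margin."""
    required = (replicated_gb + expected_growth_gb) * (1 + headroom)
    return required <= backup_capacity_gb


# A backup sized identically to a nearly full primary fails the check.
print(backup_can_absorb(replicated_gb=950, expected_growth_gb=50, backup_capacity_gb=1000))  # False
print(backup_can_absorb(replicated_gb=950, expected_growth_gb=50, backup_capacity_gb=1500))  # True
```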

Choose the right hardware for clusters. All IBM eServer groups provide systems that can be clustered, so your IBM marketing contact or your IBM business partner should be able to help you with this determination. But the hardware considerations don't stop at the server level. You should provide protection from power losses through an external uninterruptible power supply (UPS) or equivalent. You'll also need to determine what type of communications media is compatible with clustering. Again, your IBM marketing contact or IBM business partner can help with this information. There are also manuals (across all IBM eServer lines) that provide this information.

(NOTE: We will discuss the various IBM server lines in a little more detail further in this course.)

Choose a networking strategy

Design the interconnecting nodes. Local area networks (LANs), wide area networks (WANs), OptiConnect system area networks, or any combination of these connectivity devices should be considered as you design your optimal clustering environment. Your choice depends on: volume of transactions, response time requirements, distance between the nodes and cost considerations. You can use these same considerations when determining the connection media between primary and backup locations of resources.

Design the cluster network. Before you configure your networks for clustering, you need to carefully plan and do some initial precluster configuration involving TCP/IP. It is important that you read these topics before configuring your cluster. They will tell you when or how to: set up IP addresses, set TCP/IP attributes, and avoid a cluster partition. There are manuals (across all IBM eServer lines) that provide this information.

Write highly-available cluster applications

A highly-available application is one that can be resilient to a system outage in a clustered environment. Several levels of application availability are possible:

- If an application error occurs, the application restarts itself on the same node and corrects any potential cause for error (such as corrupt control data). You would view the application as though it had started for the first time.

- The application performs some amount of checkpoint-restart processing. You would view the application as if it were close to the point of failure.

- If a system outage occurs, the application is restarted on a backup server. You would view the application as though it had started for the first time.

- If a system outage occurs, the application is restarted on a backup server and performs some amount of checkpoint-restart processing across the servers. You would view the application as if it were close to the point of failure.

- If a system outage occurs, a coordinated failover of both the application and its associated data to another node or nodes in the cluster would take place. You would view the application as though it had started for the first time.

- If a system outage occurs, a coordinated failover of both the application and its associated data to another node or nodes in the cluster would take place. The application performs some amount of checkpoint-restart processing across the servers. You would view the application as if it were close to the point of failure.
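Checkpoint-restart processing, which lets an application resume 'close to the point of failure', can be sketched as follows. The Python below is a hypothetical example: it processes a list of work items and records the index of the next item in a small JSON checkpoint file after each one, so a restarted copy skips work that was already done. The file format and function names are assumptions; for a restart on a backup node, the checkpoint file would have to live on storage that both nodes can reach.

```python
import json
import os
from collections.abc import Callable


def load_checkpoint(path: str) -> int:
    """Return the index of the next item to process (0 if no checkpoint exists)."""
    try:
        with open(path, encoding="utf-8") as f:
            return json.load(f)["next_item"]
    except FileNotFoundError:
        return 0


def save_checkpoint(path: str, next_item: int) -> None:
    # Write to a temporary file, then rename it over the old checkpoint,
    # so a crash mid-write never leaves a half-written checkpoint behind.
    tmp = path + ".tmp"
    with open(tmp, "w", encoding="utf-8") as f:
        json.dump({"next_item": next_item}, f)
    os.replace(tmp, path)


def run(items: list[str], process: Callable[[str], None], checkpoint_path: str) -> None:
    start = load_checkpoint(checkpoint_path)  # resume where the last run stopped
    for i in range(start, len(items)):
        process(items[i])
        save_checkpoint(checkpoint_path, i + 1)  # item i is now done
```

If `run` fails partway through, calling it again with the same checkpoint path continues from the first unfinished item rather than from the beginning. An item that was being processed at the moment of failure is redone, so `process` should be safe to repeat.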

eServer Family of Servers and Clustering

Hardware

IBM understands this multitude of clustering considerations, and has implemented excellent, best-of-brand clustering solutions across its entire family of server brands -- from its Intel processor-based platforms in stand-alone and rack-optimized configurations to powerful UNIX servers, iSeries and mainframes. IBM delivers well respected and diverse cluster technology that meets the rigorous demands of environments such as multi-terabyte databases, large-scale Web serving, and computationally intensive technical workloads reaching trillions of floating point operations per second. This cluster technology encompasses a broad range, as shown in Figure 7 below.

Figure 7. eServer Family of Servers and Clustering -- Hardware

- eServer cross-platform clustering solutions include the Cluster 1350 which combines xSeries rack-optimized servers running the Linux operating system with industry standard networking, robust systems management and world-class service and support to deliver highly scalable, integrated cluster offerings. You can configure storage subsystems to provide even greater system capacity for high performance computing. IBM Cluster Systems Management (CSM) for Linux, included with the Cluster 1350, provides centralized control of the entire cluster and can help reduce administrative costs. The Cluster 1350 fits beautifully in most clustering solutions, regardless of the additional IBM platform(s) with which they are implemented.

- pSeries eServer clustering solutions include the Cluster 1600, which runs with IBM Parallel System Support Programs for AIX (PSSP), or with the new CSM for AIX. PSSP allows for the management of a broad range of pSeries servers and RS/6000 SP nodes under a single cluster name and identification number.

- zSeries eServer clustering solutions are based on Parallel Sysplex technology, which delivers near zero real-time failure detection and recovery. zSeries is at the heart of e-business infrastructure coupling solutions that have been implemented by many of the largest corporations, ensuring maximum performance, real-time responsiveness, application flexibility, and simplified management for data and transaction serving.

- iSeries eServer clustering solutions let you efficiently group servers to achieve near 100% availability. These servers run sophisticated business applications effortlessly and continuously, cutting complexity, slashing costs, and speeding implementation. With OS/400 V5R1, iSeries servers have new high availability clustering features. Support for switchable disk clusters with Independent Auxiliary Storage Pools (IASPs) and Switchable DASD allows data and applications on an I/O tower to be switched between a primary and backup server. 'ClusterProven for Domino' is also available with IASP and Switched Disk support. There are also advanced journaling functions. And Management Central provides for definition and control of cluster functions.

eServer Family of Servers and Clustering -- Linux

IBM is also demonstrating its commitment to scalable Linux-based supercomputing through its partnership with leading academic and research institutions, such as the University of New Mexico and the National Center for Supercomputing Applications. Leveraging established technology and a diverse portfolio of scientific applications in the new-age, scalable, Linux-based super clustering environment provides researchers with a low-cost platform for developing enhanced clustering management tools to explore the scalability of various types of engineering and scientific applications. IBM is committed to continuing to provide a comprehensive portfolio of Linux and eServer clustered solutions that extend the eServer brand promise of value to customers in a broad range of application segments and industries. These solutions leverage IBM's strong clustering technology heritage to deliver the essential attributes inherent in clustered computing such as quality of service (QoS), capacity on demand (CoD), high availability, security, workload Smart, manageability, as well as vertical and horizontal scalability. See Figure 8 below.

Figure 8. eServer Family of Servers and Clustering -- Linux

IBM is also demonstrating its commitment to its Linux customers who demand affordable open source high-availability solutions by partnering with the Linux community in the Linux-HA project. The Linux-HA project provides reliable, easy-to-use high-availability eServer solutions which provide great value to customers in many industries and application types.

eServer Family of Servers and Clustering -- Software

IBM's key strength and differentiator in the area of clustering lies in its clustering software which enables organizations to build, manage and expand clusters efficiently. Cluster-ready software from IBM enables any multiple eServer system solution or cluster to look and act like a single system for end users and system administrators. See Figure 9 below.

Figure 9. eServer Family of Servers and Clustering-- Software

For example, the eServer cluster family of software offers PSSP for AIX (Parallel System Support Programs) as a central point of management control. General Parallel File System (GPFS), available for AIX and Linux clusters, provides a cluster-wide file system allowing users shared access to files spanning multiple disk drives. HACMP for AIX (High Availability Cluster Multiprocessing) allows continuous access to data and applications through component redundancy and failover in mission-critical environments. LoadLeveler is a distributed network-wide job management facility designed to dynamically schedule work on IBM UNIX servers. High Availability Geographic Cluster (HAGEO) and Geographic Remote Mirror (GeoRM) for AIX help ensure that data and business-critical applications are continuously available, even when a natural disaster threatens. Cluster Systems Management (CSM) for Linux and AIX (included with the IBM eServer Cluster 1350 and Cluster 1600, respectively) is also provided to dramatically simplify cluster administration by providing management from a single point of control.

The zSeries server family offers z/OS Managed System Infrastructure (msys), a major ease-of-use initiative. msys will, over time, enhance the z/OS platform with services and interfaces that make it much more flexible and easier to manage. In z/OS R1, msys for Setup provides the initial framework to help with setup tasks, particularly configuring system components. In z/OS R2, msys for Setup is used by additional exploiting products for their setup. In addition, msys for Operations provides an initial implementation that facilitates operations handling.

The objective of msys is to make z/OS system programmers and operators significantly more productive, freeing this scarce and valuable personnel for other tasks. In addition, msys reduces the skill requirements associated with setup and operations. The msys initiative strongly contributes to positioning z/OS as a superior and manageable operating system.

The iSeries server family, along with strategic partnering, offers several ClusterProven technologies that intuitively support: cluster creation and the deletion of cluster nodes and applications; automatic initiation of an application and data switchover to a backup cluster node; control over the start and stop of data replication for an application; coordination of switchovers between the application and the data; and the start and stop of the cluster-based application.

Under Linux, IBM leads the Linux-HA (High-Availability Linux) project, which provides proven and reliable open source high-availability capabilities for Linux. Linux-HA is available for all IBM Linux platforms: xSeries, zSeries, pSeries and iSeries. DRBD provides advanced data mirroring capabilities for Linux. Support for Linux-HA and DRBD is available from IBM.

Wrap-up

Summary

A clustered computing-based infrastructure cannot always be easily constructed from a single homogeneous building block. Such a simple approach will not deliver the full breadth of capability for all its potential uses -- front-end Web serving, business application processing, back-end database serving, data mining, etc. Enterprises must be able to select the various servers that are optimized for each needed function regardless of their origin. And yet when viewed collectively, these disparate servers need to move and respond 'as one' through the various demands of any given day.

Few within the IT world will ultimately escape the need to embrace clustering technology. Nor should they wish to. Today's clustering technologies provide benefits far beyond the mere historical challenge of high-end scalability. These benefits include extreme availability, redundancy/resiliency/replication, backup, failover, load balancing, automated cross-platform systems management, parallel processing, resource sharing and capacity on demand.

There are many clustering models to choose from, most of which have been illustrated in this course. If you're now convinced that clustering is for your organization, your next step should be to develop a more in-depth understanding of what clustering can do in your particular environment. Be sure to review the clustering resources listed in Appendix A of this course.

Appendix B: Miscellaneous cluster terminology

High-availability cluster multiprocessing (HACMP) -- a set of independent systems (called nodes) that are organized into a network to share resources and communicate with each other. The result is optimized application execution and scalability. The implementation of HACMP also protects against unplanned outages and server downtime.

Server cluster -- is a group of computers that are connected in such a way that they act as a single server and are usually housed together in a single location. In an enterprise network, a server cluster (or server farm) delivers centralized access control, file access, printer sharing, and backup for workstation users. Each server within the cluster may have its own operating system or share an operating system, and the cluster may provide load balancing when server requests peak beyond normal capacity. In a server farm, if one server fails, another acts as backup.

Web farm -- is a cluster of Web servers that provide load balancing support for one or more Web sites. Internet service providers (ISPs) routinely use Web farms to deliver their Web hosting services.

Colocation -- is the provision of space for a customer's server equipment on the service provider's premises. For instance, some Web farms allow you to put your own server on their site.

Cluster node -- refers to any server that is considered a member of a cluster. Each node will need to be assigned a cluster node name, which will be associated with one or more Internet Protocol (IP) addresses. This is because cluster communications (within an IBM cluster environment) will use the TCP/IP protocol to establish communications paths between cluster services on each node in the cluster.
